Editor's note: MarkLogic data architect Kurt Cagle shares his view that without a sound process for collecting, organizing, and storing data, the results of data analysis can only be garbage.

About four years ago, the data scientist emerged as an indispensable profession. Technologists dug out their old college statistics textbooks, spent a lot of time relearning Python Pandas and R along with the latest machine learning theory, and bought new white lab coats. I know I did. If you were a Hadoop developer, data science was a good place to land, too. After all, everyone assumed that a data scientist who could not write map/reduce was not a good data scientist. This may even have delayed the looming collapse of the Hadoop business by a few years, as Indian programming shops turned out thousands of new Hadoop programmers and data science "experts" to catch the next big trend.

Companies paid top dollar for this. Every company on the Nasdaq paid data scientists high salaries for fear of being overtaken by competitors and realizing it only in hindsight. Meanwhile, sales managers and C-level executives could count on firing up their iPads in the morning to see, in real time, how well the company was doing. The dashboard became a major status symbol: senior executives got a lavish executive dashboard with 3D visualizations and real-time animated scatter plots, while more junior colleagues got a flat 2D version with minimal summaries.

Yet so far, nothing has really changed. Data scientists (mostly highly educated people with years of experience in fields such as pharmaceutical analysis and advanced materials engineering) are gradually realizing that the quality of the data they have to work with, to put it bluntly, sucks.

People have been led to believe that because they have thousands of databases scattered all over the place, their organization holds massive amounts of data, and that most, if not all, of it is valuable. Data scientists are finding the opposite: most of the data is outdated, in the wrong format, and built on a data model that suited whatever application its programmer needed at the time. A great deal of it lives in spreadsheets that have been modified again and again with no process, controls, or foresight, so the records are far from the truth. Too much of it is one-off data with no documentation. Column names look like MFGRTL3QREVPRJ, and keys are wildly inconsistent. In other words, the data is basically useless for any analysis, and it is a far cry from what someone used to analyzing daily results from pharmaceutical trials has in mind.

So now you are paid $150,000 a year to produce dashboards for sales representatives who know nothing about statistics, and you cannot do anything that would take millions of dollars and sign-off to carry out. Your data is messy, and a good share of it is completely useless, but proposing to rebuild the databases scares the sales side, because it costs millions of dollars and does not seem necessary.

Of course, you could simply lie to them and hastily wire up a random number generator. The numbers it feeds them might even be more accurate than they realize. But people who work with data are not used to lying, because it runs counter to their basic goal of being as precise as possible.

So what do you do? At this point I have to put on my semantic evangelist hat and tell you that you should build a semantic data warehouse. You really should. It is not that difficult, and it delivers tangible benefits. But I will also say that it is not a magic bullet.

A semantic data warehouse makes it easier to get data into a workable format (or to find out which data is garbage and can simply be deleted). The reality, however, is that this is not a data science problem. It is a data quality and ontology engineering problem.

So let me put it more plainly, so that the people in executive suits can follow along. You have a data problem. Your data scientists have all kinds of useful tools for presenting the results of an analysis, but without high-quality data, what they produce is meaningless. That is not their fault. It is yours. Every day you spend expecting a cool dashboard to win you a $10 million contract is a day wasted and a day of watching money drain away.

Your job is not easy. You first need to determine what information you actually need to track, and then spend time with your data scientists and data ontologists working out what data that requires. Do not expect to point at a database and have the data magically appear. Generally speaking, databases are built by programmers to support applications, not to provide in-depth metrics about the company.

Sit down and look at the resources you currently have. Understand that the people who rely on these databases to do their jobs will be very reluctant to give you access, especially when that access might expose them to blame. Understand, too, that the documentation for most databases is terrible (and that is the good case; most databases have no documentation at all), so you will have to investigate by following obscure references. This kind of detective work is called pathological computing, and most programmers hate it because it means getting inside the heads of other programmers, who have probably left the company, whose skill level is unknown, and who have long since forgotten what they meant by something they wrote ten years ago. A relational data lake does not solve this problem.
What a data lake solves is letting a single host access all of the data. For pathological computing, that is a necessary step, but it is neither the hardest part nor the most expensive. The most expensive part is working out what the data actually means, or even just recognizing when scattered data sets are talking about the same thing. There is no off-the-shelf solution to this problem. Anyone who tells you there is one is fooling you.

Here I will plug semantic solutions once more: graph triple stores, RDF, ontology management, and so on. These are not out-of-the-box solutions. They are tools that make pathological analysis possible and put the means of managing the process in programmers' hands.

You need to understand, however, that all of this usually requires rethinking the entire data flow and working out how to capture information at its source and route it into the appropriate pipeline early. It requires your programmers and database administrators to give up some of their autonomy and work against centralized, shared storage. It also means that you, as an executive, need to become more familiar with data management and data sources. For most business people this is a fairly radical change, far more radical than having a few business people do some IT work.

Today's businesses, however, are turning into (and mostly already are) data management companies that happen to sell goods or services. Today's CEO needs to pay more attention to the data flowing into and out of the organization than to managing sales, and to make sure that data is of the highest possible quality. This is not just about meeting compliance requirements. The integrity of the data is critical to these companies' success in the market.

This means working with your executive data team to determine the scope of what you need to know and want to know, and what is irrelevant, and then establishing the processes needed to collect data that serves the business. Simply pointing at a database interface and pulling out its contents achieves nothing beyond higher disk storage costs. Hiring data scientists to analyze junk data only produces junk analysis. It may be beautiful, if you care about that, full of gradients and 3D effects, but it is useless.
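
To make the reconciliation problem concrete (scattered data sets that talk about the same thing under inconsistent keys), here is a minimal Python sketch. Everything in it is an assumption made for illustration: the two record sets, their field names, and the name-normalization rule are hypothetical, and plain tuples stand in for RDF triples rather than a real triple store. Matching on normalized names is deliberately naive. It shows the shape of the work, not a ready-made solution, and the unmatched records are exactly the ones someone still has to investigate by hand.

```python
from collections import defaultdict

# Hypothetical records from two systems that describe the same customers
# under different, inconsistent keys (all names and values are made up).
crm_rows = [
    {"cust_id": "C-0017", "name": "Acme Corp."},
    {"cust_id": "C-0042", "name": "Globex"},
]
billing_rows = [
    {"ACCTNO": "17", "CUSTNM": "ACME CORP"},
    {"ACCTNO": "99", "CUSTNM": "Initech"},
]


def normalize_name(name):
    """Lowercase and keep only letters and digits so near-identical names compare equal."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def to_triples(rows, source, key_field, name_field):
    """Turn each row into (subject, predicate, object) triples with a source-scoped subject."""
    triples = []
    for row in rows:
        subject = f"{source}:{row[key_field]}"
        triples.append((subject, "name", row[name_field]))
        triples.append((subject, "source", source))
    return triples


def link_same_entities(triples):
    """Emit 'sameAs' triples for subjects whose normalized names match (a naive assumption)."""
    by_name = defaultdict(list)
    for subject, predicate, obj in triples:
        if predicate == "name":
            by_name[normalize_name(obj)].append(subject)
    links = []
    for subjects in by_name.values():
        first = subjects[0]
        for other in subjects[1:]:
            links.append((first, "sameAs", other))
    return links


triples = (
    to_triples(crm_rows, "crm", "cust_id", "name")
    + to_triples(billing_rows, "billing", "ACCTNO", "CUSTNM")
)
links = link_same_entities(triples)

# Subjects that could not be linked to anything in the other system.
linked = {s for s, _, _ in links} | {o for _, _, o in links}
unmatched = sorted({s for s, p, _ in triples if p == "name"} - linked)

print(links)
print(unmatched)
```

The design choice worth noting is that the link between the two records is itself stored as data (a "sameAs" statement) instead of being baked into a join, so a wrong guess can be reviewed and corrected later without touching either source system.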

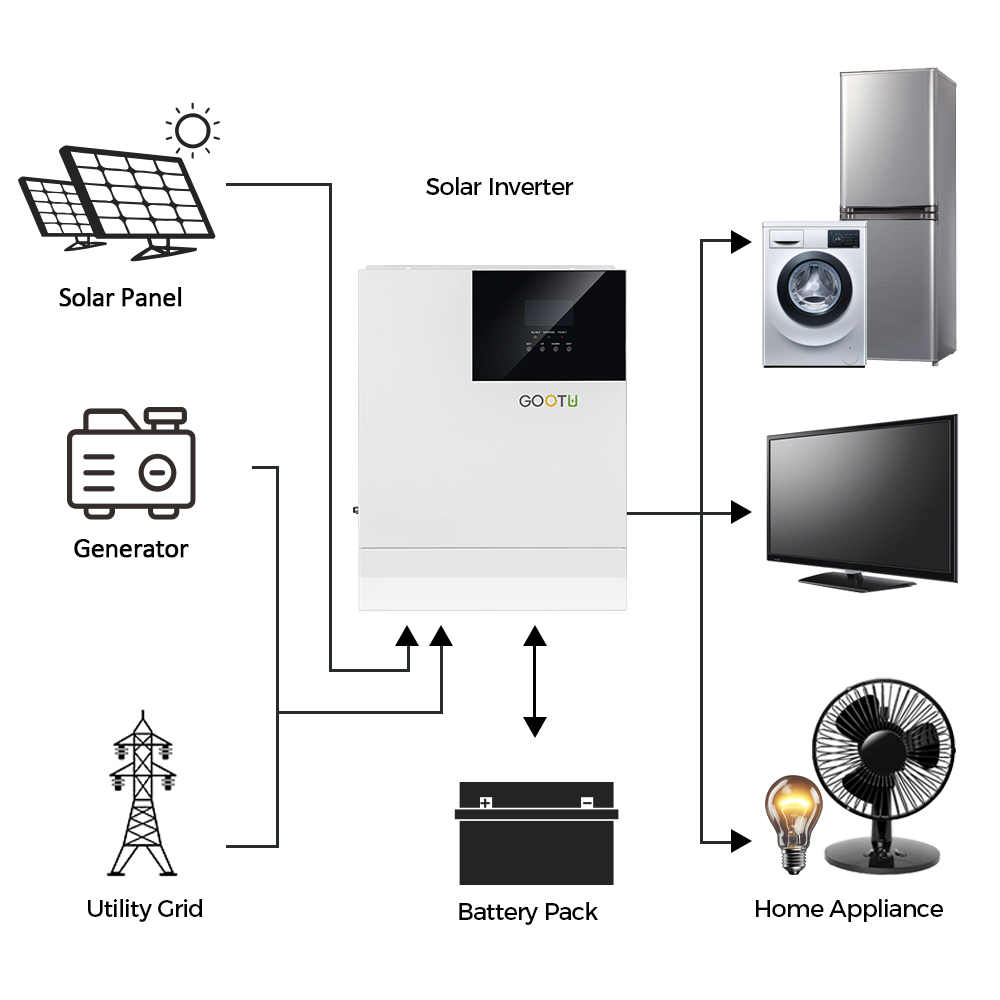
