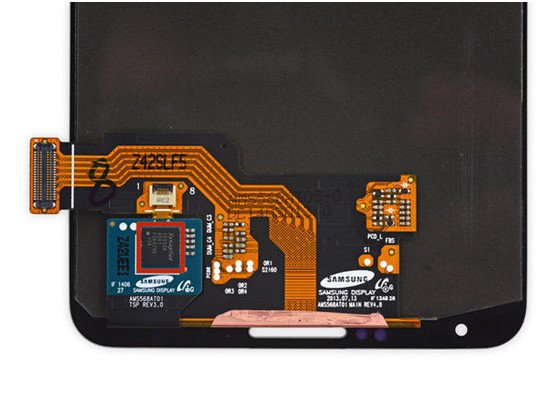

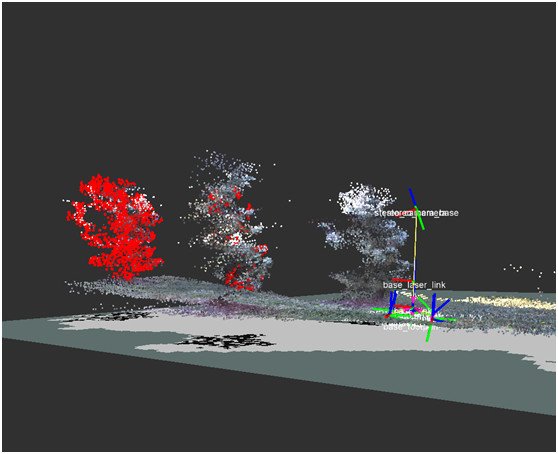

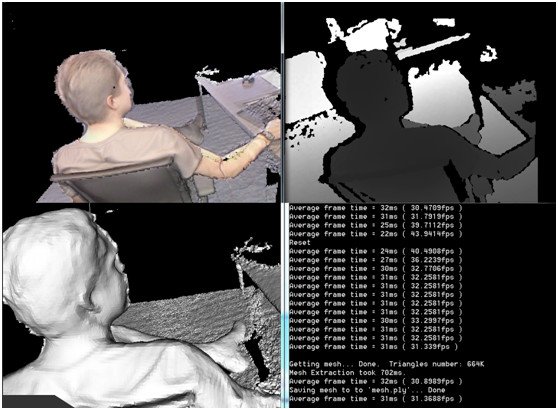

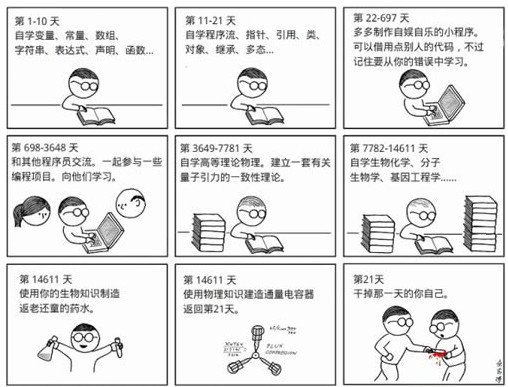

Microsoft's HoloLens MR headset has attracted a lot of attention, yet at this stage there are almost no HoloLens-like devices on the market. So what are the technical challenges in developing one? Let's take a look together.

Let's simplify the problem first and start by building an ordinary VR headset in the Oculus mold. First, you need a display to render to. In today's fierce competition, an OLED panel is simply the best choice: the inherent latency of a TFT LCD panel alone is enough to make you lose at the starting line. Next comes resolution: so-called "2K" panels (2560 x 1440) will be mainstream from the end of this year into early next year, because everyone is already fed up with the blur and jagged edges of current VR content. Then you need a refresh rate of no less than 75 Hz, a field of view (FOV) wide enough for immersion, low persistence, and so on. Of course, all of these specs assume you can secure a stable supply of OLED panels: if Samsung pulls a long face and ignores you, you may be left with nothing to do but wait.

Then you need two lenses in front of the display, spherical or aspherical, in a range of focal lengths, made of PMMA with high transmittance and very low dispersion, and if necessary given an extra molding step to improve impact resistance. Are you going to buy those at a Chiba Eyeglasses store?

Then there's the IMU, a nine-axis sensor combining an accelerometer, a gyroscope and a magnetometer, which determines the headset's orientation in space. Choose the highest output rate you can, since that means lower sensor latency, and apply the necessary filtering and interpolation so the output rejects interference and stays smooth. Oh? You want to control it with a gamepad? Then forget HoloLens and go build a smart excavator.
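
The original doesn't say which filter a headset should use. As a minimal sketch of the idea, here is a complementary filter for a single axis (pitch), a common lightweight alternative to Kalman-style fusion. The function names, the 0.98 weight and the sample format are illustrative assumptions; a real nine-axis headset would track full 3D orientation (typically as a quaternion) and use the magnetometer to correct yaw.

```python
import math

# Illustrative sketch only: function names and the default weight are hypothetical.

def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (radians) implied by gravity as seen by the accelerometer (m/s^2)."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))

def complementary_pitch(samples, dt: float, alpha: float = 0.98) -> list:
    """Fuse gyro rate and accelerometer readings into a smoothed pitch estimate.

    samples: sequence of (gyro_rate_rad_s, ax, ay, az) taken every dt seconds.
    alpha:   weight on the integrated gyro; (1 - alpha) pulls the estimate
             toward the accelerometer, which slowly cancels gyro drift.
    """
    estimates = []
    pitch = None
    for gyro_rate, ax, ay, az in samples:
        measured = accel_pitch(ax, ay, az)
        if pitch is None:
            pitch = measured  # initialise from gravity on the first sample
        else:
            # Trust the gyro over short intervals (smooth, but drifts) and the
            # accelerometer over long ones (noisy, but drift-free).
            pitch = alpha * (pitch + gyro_rate * dt) + (1.0 - alpha) * measured
        estimates.append(pitch)
    return estimates
```

With a hypothetical gyro bias of 0.01 rad/s on a stationary, level headset sampled at 1 kHz, plain integration drifts by 0.01 rad every second, while this filter settles near alpha * bias * dt / (1 - alpha), about 0.0005 rad.
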
Oh, and you need solid cooling: at least two extra fans, so the oily skin of a shut-in gamer doesn't cover the lenses in a layer of white fog. You also need a well-designed power management scheme. Don't assume Oculus has nailed this: the way the latest DK2 completely falls over on machines with switchable graphics cards is a painful fact right in front of us (see the DK2 "Setup Guide and GPU Compatibility").

And all of the above ignores structural design, tooling, board prototyping, mold making, mass production, assembly, materials, user comfort, data protocols, lens distortion, SDK interfaces, demo samples, promotion, patents, and the parents of students who might sue you over radiation... This whole string of other things may seem unimportant, but by the time you have dealt with all the tedious requirements people will grill you about, we might just have a VR headset that looks about passable... And this is only the beginning.

Now suppose we, or our investors, believe in the old slogan "the bolder you dare to be, the more the land will yield", and want to build HoloLens-like augmented reality glasses on top of all this. Simple: it's just two more cameras, a trivial little thing. So what we need now is a stereo camera module. To make the augmented reality as convincing as possible, its resolution and frame rate should at least match the VR display. To avoid blur and tearing during fast motion (don't forget that damn IMU of yours), it should also have a global shutter, so that every frame is sharp. Oh, and the lens parameters have to match too. So go and find out the price of a global-shutter camera module (a single one, not even a stereo pair) with roughly 2K resolution, more than 75 FPS and more than a 120-degree FOV.
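
Why the global shutter matters can be put into numbers. A rolling shutter reads the sensor row by row, so during a fast head turn the top and bottom of one frame see slightly different directions and vertical edges come out slanted. The sketch below estimates that shear under a uniform pixels-per-degree assumption; the function name and the example figures (10 ms readout, a 200 deg/s head turn) are hypothetical, not measurements of any particular sensor. A global shutter removes this skew, while blur within each exposure still depends on exposure time.

```python
# Illustrative sketch only: the function name and example numbers are hypothetical.

def rolling_shutter_skew_px(yaw_rate_deg_s: float,
                            readout_time_s: float,
                            horizontal_fov_deg: float,
                            horizontal_resolution_px: int) -> float:
    """Approximate horizontal shear (pixels) between a frame's first and last rows.

    A rolling shutter reads rows one after another over readout_time_s, so a
    head turning at yaw_rate_deg_s rotates by yaw_rate_deg_s * readout_time_s
    degrees between the top and bottom of the same frame. A global shutter
    exposes all rows together, which corresponds to readout_time_s == 0.
    Assumes a uniform pixels-per-degree mapping (lens distortion ignored).
    """
    pixels_per_degree = horizontal_resolution_px / horizontal_fov_deg
    return yaw_rate_deg_s * readout_time_s * pixels_per_degree

if __name__ == "__main__":
    # Hypothetical: 2560 px across 120 degrees, 10 ms readout, 200 deg/s turn.
    print(round(rolling_shutter_skew_px(200.0, 0.010, 120.0, 2560), 1))  # prints 42.7
```

Even with these modest assumed numbers, a vertical edge would lean by roughly 43 pixels across one frame, which is very visible when virtual content is supposed to stay locked to it.
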
Well, assuming your investors can accept a price like that without flinching, you will still need three heads and six arms to take care of re-engineering, FPGA processing, hardware clock synchronization, and single- or multi-stream signal output, and you also have to face another classic computer vision problem: calibration. If you don't calibrate, that is, align and correct the correspondence between the VR content and the camera image, your product will only be good for overlaying a CCTV logo on the camera feed.

That's a real hassle. Actually, I have a good idea: why not use a transparent screen to show what's in front of me, and then overlay the VR scene? It sounds good, but the biggest problem with existing transparent LCD technology is that you lose the backlight panel, so you need a diffuse light box to make up for it. And where does that fill light go, onto your eyebrows or your forehead? Or... In short, until materials scientists tell you that the world has been revolutionized and the new technology has become cheap, you're better off quietly sticking with existing, mature solutions.

Okay, okay, say it's all done. Nobody knows yet how your module will mount onto the glasses, or how to make sure that rigid connection doesn't resonate as the user moves. Next, we need to put a whole PC inside... That's right, nothing wrong with that: HoloLens also has a built-in CPU and GPU for the necessary computation, and boards like Intel Atom, MK802 or Raspberry Pi are mature enough to stuff into your glasses. Go ahead. However, the old enemies are back: heat and power management. Between a CPU that runs at full load every now and then, and the battery pack and voltage regulator module you may have to add to get rid of the cable, who knows how bulky and unsightly the device and its boards will become to accommodate all these unknowns.
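
To make the calibration point concrete: calibration recovers a camera's intrinsics (focal lengths fx, fy and principal point cx, cy) and extrinsics (rotation R and translation t). With those, a virtual object at a known 3D position can be drawn on the same pixel where the camera sees that spot; with wrong values, the content slides around on the feed. This minimal pinhole-model sketch ignores lens distortion, which a 120-degree lens would certainly need. The class and field names are illustrative, and in practice the parameters are usually estimated with tools such as OpenCV's camera calibration module.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

# Illustrative sketch only: class and field names are hypothetical.
Vec3 = Tuple[float, float, float]

@dataclass
class PinholeCamera:
    """Intrinsics (fx, fy, cx, cy) and extrinsics (R, t) recovered by calibration."""
    fx: float  # focal length in pixels, x
    fy: float  # focal length in pixels, y
    cx: float  # principal point, x (pixels)
    cy: float  # principal point, y (pixels)
    R: Sequence[Sequence[float]]  # 3x3 rotation, world -> camera
    t: Vec3                        # translation, world -> camera (metres)

    def project(self, p_world: Vec3) -> Optional[Tuple[float, float]]:
        """Map a 3D world point to pixel (u, v); None if it is behind the camera."""
        # Rigid transform into the camera frame: p_cam = R * p_world + t
        xc, yc, zc = (
            sum(self.R[i][j] * p_world[j] for j in range(3)) + self.t[i]
            for i in range(3)
        )
        if zc <= 0.0:
            return None
        # Perspective divide, then apply the intrinsic matrix K
        return (self.fx * xc / zc + self.cx, self.fy * yc / zc + self.cy)

IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
```

For example, with hypothetical intrinsics fx = fy = 1000 and the principal point at (1280, 720), a point 2 m straight ahead lands exactly on the principal point, and moving it 0.2 m to the right shifts it by 100 pixels.
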
Have you spent your last drop of blood yet? It's not over: the main event hasn't even started. SLAM, simultaneous localization and mapping. You may remember Kinect Fusion from back then, and the thrill of generating spatial point clouds and 3D reconstructions with just a single RGB-D camera. There is also the open-source Kinfu, whose efficient SLAM algorithm you can refer to: in an unknown environment it quickly builds a map from color and depth information and determines its own position in that map. Without external feedback the algorithm still accumulates drift error, but with the continued efforts of scientists and of you, I'm sure it can be squeezed into a small, elegant ARM system the size of a USB stick, which, with the general-purpose computing power of a high-performance GPU that somehow doesn't heat up, will accept 2K stereo camera input and perform reconstruction and localization. What a great achievement that would be... Wait, what did we miss? An RGB-D camera, you say? Depth, you say? Did the stereo camera module we just built give us that? Of course not. So what technology do you need? ToF. And what is ToF? Oh... damn, all that work for nothing.

Maybe not, though: finding a 2K RGB-D camera that also combines stereo, high-speed, global-shutter sensors doesn't look easy, and depth sensing, 3D reconstruction and real-time localization may not cope with that resolution anyway, not to mention how your CPU and fans are holding up. So let's capture the depth information separately, as HoloLens probably does. Great. I don't know how you got here, but let's suppose that through studying and practicing all sorts of specialist knowledge, and 21 straight days of continuous effort, you pulled it off. Now it's time to unveil your latest result... Hold on, how are users supposed to use it? Microsoft's video shows such awesome effects, and you expect us to build that ourselves??? Is there a Unity package?
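
For the question "what is ToF?": a time-of-flight camera measures distance by timing light. Pulsed sensors time the round trip directly; continuous-wave sensors, used in many depth cameras, emit modulated light and measure its phase lag. Once you have a depth image, each pixel can be back-projected through the pinhole model into a 3D point, which is the raw material for Kinect Fusion-style reconstruction. The helper names below are hypothetical. The sketch assumes depth is measured along the optical axis (real ToF sensors measure distance along each ray, which would need converting first), and a full pipeline would go on to align successive point clouds (for example with ICP) and fuse them into a volume.

```python
import math

# Illustrative sketch only: helper names are hypothetical.
SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def pulsed_tof_distance(round_trip_s: float) -> float:
    """Pulsed ToF: the light goes out and comes back, so halve the path length."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0

def cw_tof_distance(phase_shift_rad: float, modulation_hz: float) -> float:
    """Continuous-wave ToF: distance from the phase lag of modulated light.

    The round trip 2d delays the signal by a phase of 2*pi*f*(2d/c), so
    d = c * phase / (4*pi*f). Only unambiguous up to c / (2*f); beyond that
    the phase wraps around.
    """
    return SPEED_OF_LIGHT * phase_shift_rad / (4.0 * math.pi * modulation_hz)

def depth_map_to_points(depth_map, fx, fy, cx, cy):
    """Back-project a depth image (metres along the optical axis, 0 = invalid)
    into a camera-frame point cloud using the pinhole model."""
    points = []
    for v, row in enumerate(depth_map):
        for u, z in enumerate(row):
            if z > 0:
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points
```

At a 20 MHz modulation frequency, for instance, the unambiguous range is about 7.5 m, and a phase lag of pi corresponds to about 3.75 m.
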
Is there an Unreal package? Is there a low-level graphics API? Why only DX11 and no DX9? Why no MSAA under OpenGL? Why is your demo project only for Visual Studio 2012? Why no support for Intel's high-performance integrated graphics? Why no mobile version? Why no support for other operating systems? Why? And why does it look so clunky? It's a prototype? Then redesign it, build it, make the molds, miniaturize it, demo it, test it, collect feedback, redesign it, build it, make the molds...

As for explaining what VR is, what AR is and what mixed reality (MR) is, how to place 3D models in space, how to do depth-based smoothing and occlusion, and how to render real-time lighting that matches the real scene... each of those is enough for another topic. Moreover, none of the topics above has been covered in depth, and since my ability and knowledge are limited, there are bound to be plenty of omissions and mistakes. So this is more than a single answer, or a single question, can carry...

As the author himself says, this answer does not cover all of the relevant knowledge. Still, in the editor's opinion, it gives readers a sense of how hard it is to develop this kind of device. We also hope HoloLens will become cheaper and better, so that users can enjoy the appeal of MR in the future.
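
The author leaves depth-based occlusion for another topic. Its core, though, is a per-pixel depth test, just like a z-buffer: a virtual pixel is shown only where it is closer than the real surface measured by the depth sensor. This sketch assumes a video pass-through design like the one discussed above, with a real depth map registered to the camera image; the function name is hypothetical. It produces hard edges, and noisy depth makes those edges flicker, which is where the smoothing the author mentions comes in. On an additive optical see-through display, the same test would instead decide which virtual pixels to leave black (that is, transparent).

```python
# Illustrative sketch only: the function name and input layout are hypothetical.

def composite_with_occlusion(camera_rgb, camera_depth, virtual_rgb, virtual_depth):
    """Per-pixel depth test between the real scene and rendered virtual content.

    All four inputs are equally sized 2D lists (rows of pixels). Depths are in
    metres; use float("inf") in virtual_depth wherever nothing was rendered.
    Assumes camera_depth is valid at every pixel and registered to camera_rgb.
    """
    composited = []
    for cam_row, cam_d_row, virt_row, virt_d_row in zip(
            camera_rgb, camera_depth, virtual_rgb, virtual_depth):
        out_row = []
        for cam_px, cam_d, virt_px, virt_d in zip(cam_row, cam_d_row, virt_row, virt_d_row):
            # The virtual pixel wins only if it is closer than the real surface.
            out_row.append(virt_px if virt_d < cam_d else cam_px)
        composited.append(out_row)
    return composited
```
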

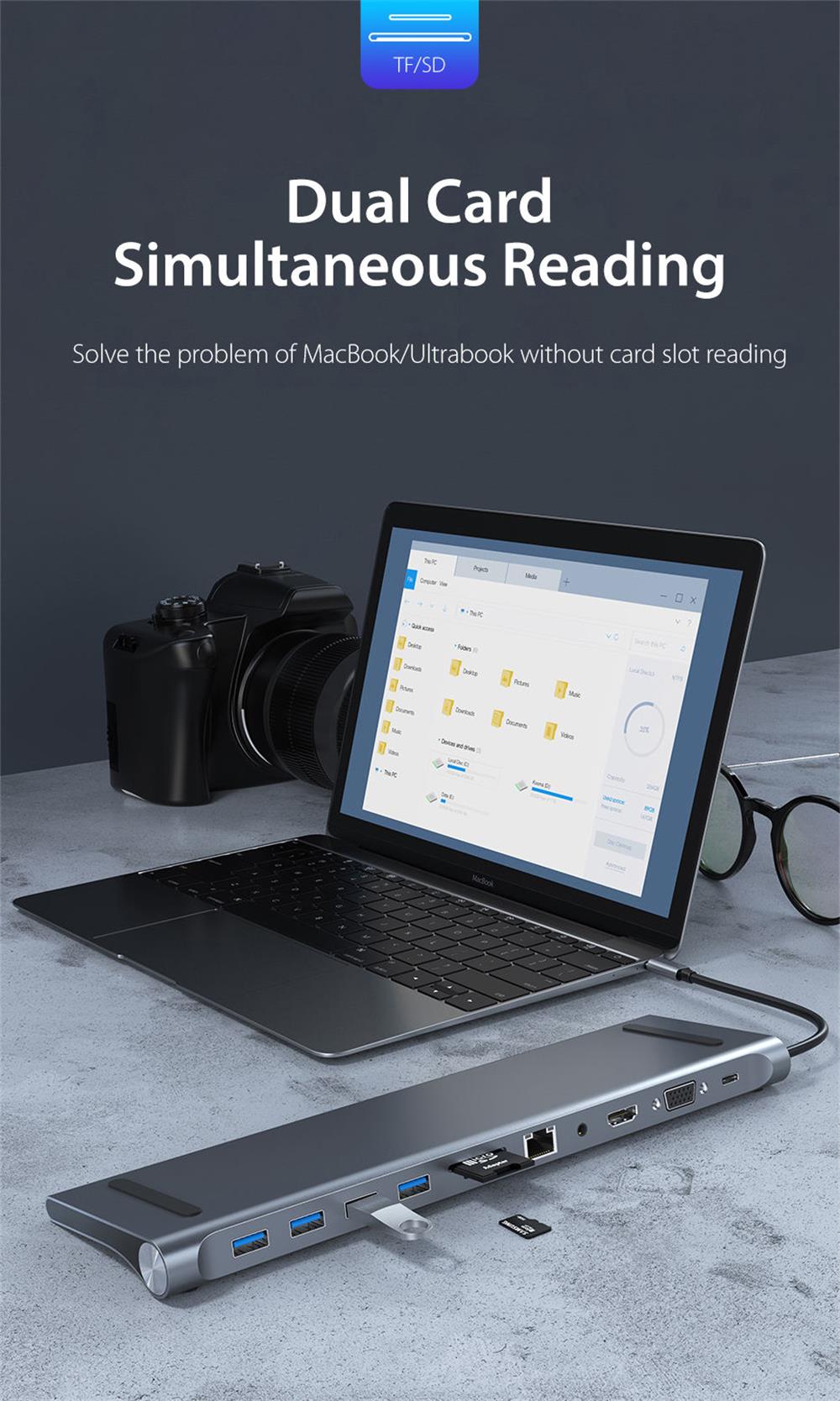


What technologies are needed to develop a HoloLens-like product?


From Baidu VR