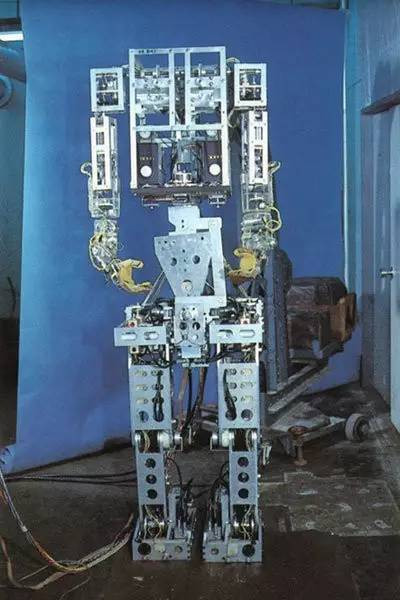

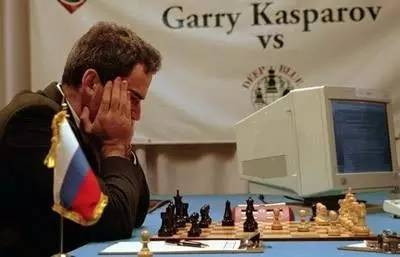

From theologians to scientists to writers, countless people have portrayed AI for us. Over several hundred years, the face of AI has gradually come into focus. Recently, Forbes published an article that compiled a "short history" of AI. Through this brief history, readers can understand AI's past, its present, and its future.

1308: The Catalan poet and theologian Ramon Llull publishes "Ars generalis ultima" (The Ultimate General Art), setting out in detail his method of using mechanical means to create new knowledge from combinations of concepts.

(Image: a Ramon Llull commemorative stamp with a face value of 1.30 euros; 220,000 were issued.)

1666: The mathematician and philosopher Gottfried Leibniz publishes "On the Combinatorial Art", which builds on the ideas of Ramon Llull. Leibniz argues that all human ideas are combinations of a small number of simple concepts.

1726: The British novelist Jonathan Swift publishes "Gulliver's Travels", which describes a machine called the Engine on the island of Laputa. Swift presents it as a project for improving speculative knowledge by practical and mechanical operations, with which "the most ignorant person, at a reasonable charge, and with a little bodily labour, might write books in philosophy, poetry, politics, laws, mathematics, and theology, without the least assistance from genius or study."

1763: Thomas Bayes develops a framework for reasoning about the probability of events. Bayesian inference eventually becomes a leading approach in machine learning.

1854: George Boole argues that logical reasoning can be performed systematically, in the same way as solving a system of equations.

1898: Nikola Tesla demonstrates the world's first radio-controlled boat at an electrical exhibition at Madison Square Garden. Tesla describes the boat as having "a borrowed mind."
(Image: Nikola Tesla's radio-controlled boat.)

1914: The Spanish engineer Leonardo Torres y Quevedo demonstrates the world's first machine that can play chess automatically, without any human intervention.

1921: The Czech writer Karel Čapek introduces the word "robot" in his play "Rossum's Universal Robots". The term comes from the Czech word "robota" (forced labor).

1925: The radio equipment company Houdina Radio Control demonstrates the world's first radio-controlled driverless car, which travels the streets of New York.

1927: The science-fiction film "Metropolis" is released. It features a robot double of a peasant girl, Maria, which unleashes chaos in the Berlin of 2026. This is the first robot depicted on screen, and it later inspired the look of C-3PO in "Star Wars".

(Image: Maria, the earliest robot on film.)

1929: Makoto Nishimura designs Gakutensoku, whose name means "learning from the laws of nature" in Japanese. It is the first robot built in Japan, and it can change its facial expression and move its head and hands through an air-pressure mechanism.

1943: Warren S. McCulloch and Walter Pitts publish "A Logical Calculus of the Ideas Immanent in Nervous Activity" in the Bulletin of Mathematical Biophysics. This highly influential paper discusses networks of idealized, simplified artificial neurons and how they can perform simple logical functions. It inspired the computer-based neural networks developed later (and eventually deep learning), as well as their popular description as "mimicking the brain."

1949: Edmund Berkeley publishes "Giant Brains: Or Machines That Think". He writes that there has recently been a good deal of news about strange giant machines that can handle information with vast speed and skill, machines that resemble what a brain would be if it were made of hardware and wire instead of flesh and nerves. He concludes: "A machine can handle information; it can calculate, conclude, and choose; it can perform reasonable operations with information. A machine, therefore, can think."

1949: Donald Hebb publishes "Organization of Behavior: A Neuropsychological Theory", proposing a theory of learning based on conjectures about neural networks and the ability of synapses to strengthen or weaken over time.

1950: Claude Shannon publishes "Programming a Computer for Playing Chess", the first published paper on developing a chess-playing computer program.

1950: Alan Turing publishes the paper "Computing Machinery and Intelligence", in which he proposes the "imitation game", later known as the "Turing test".

(Image: Alan Turing, a "father of artificial intelligence".)

1951: Marvin Minsky and Dean Edmonds build SNARC (Stochastic Neural Analog Reinforcement Calculator), the first artificial neural network, which uses 3,000 vacuum tubes to simulate a network of 40 neurons.

1952: Arthur Samuel develops the first computer checkers program, which is also the first computer program in the world to learn on its own.

August 31, 1955: The term "artificial intelligence" is coined in a proposal for a two-month study of artificial intelligence by a group of 10 experts. The proposal is submitted jointly by John McCarthy (Dartmouth College), Marvin Minsky (Harvard University), Nathaniel Rochester (IBM), and Claude Shannon (Bell Telephone Laboratories). The workshop takes place in July and August 1956 and is generally regarded as the birth of artificial intelligence as a field.

December 1955: Herbert Simon and Allen Newell develop Logic Theorist, the world's first AI program. It eventually proves 38 of the first 52 theorems in chapter 2 of Whitehead and Russell's "Principia Mathematica".
1957: Frank Rosenblatt develops the Perceptron, an early artificial neural network that recognizes patterns using a two-layer computer learning network. The New York Times reports that the Perceptron is "the embryo of an electronic computer that [the Navy] expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence." The New Yorker calls it a "remarkable machine… capable of what amounts to thought."

1958: John McCarthy develops the programming language Lisp, which becomes the most popular programming language for AI research.

(Image: John McCarthy.)

1959: Arthur Samuel coins the term "machine learning," describing how a computer can be programmed to learn to play checkers better than the person who wrote the program.

1959: Oliver Selfridge publishes the paper "Pandemonium: A paradigm for learning", in which he describes a processing model that allows a computer to recognize patterns that have not been specified in advance.

1959: John McCarthy publishes the paper "Programs with Common Sense". In it he describes the Advice Taker, a program that solves problems by manipulating sentences in formal languages. Its ultimate goal is to produce programs that learn from experience as effectively as humans do.

1961: The first industrial robot, Unimate, starts work on the assembly line of a General Motors plant in New Jersey.

1961: James Slagle develops SAINT (Symbolic Automatic INTegrator), a heuristic program that solves symbolic integration problems from first-year university calculus.

1964: Daniel Bobrow completes "Natural Language Input for a Computer Problem Solving System", his doctoral thesis at MIT. Bobrow also develops STUDENT, a natural language understanding computer program.

1965: Herbert Simon predicts that within 20 years machines will be capable of doing any work a human can do.

1965: Hubert Dreyfus publishes "Alchemy and AI". He argues that the mind is not like a computer and that there are limits beyond which AI will not progress.

1965: I.J. Good writes in "Speculations Concerning the First Ultraintelligent Machine" that "the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control."

1965: Joseph Weizenbaum develops ELIZA, an interactive program that carries on a conversation in English on any subject. Weizenbaum built the program to demonstrate how superficial communication between humans and machines is, and he is shocked by how many people attribute human feelings to it.

1965: Edward Feigenbaum, Bruce G. Buchanan, Joshua Lederberg, and Carl Djerassi begin work on DENDRAL at Stanford University. The first expert system, it automates the decision-making and problem-solving of organic chemists. Its broader purpose is to study hypothesis formation and to construct models of empirical induction in science.

1966: Shakey the robot is introduced. It is the first general-purpose mobile robot that can reason about its own actions. In 1970, Life magazine calls it the "first electronic person." The article also quotes the computer scientist Marvin Minsky as saying: "In from three to eight years we will have a machine with the general intelligence of an average human being."

1968: The film "2001: A Space Odyssey" is released. It features HAL, a sentient computer.

1968: Terry Winograd develops SHRDLU, an early natural language understanding computer program.

1969: Arthur Bryson and Yu-Chi Ho describe backpropagation as a multi-stage dynamic system optimization method. A learning algorithm that can be applied to multi-layer artificial neural networks, it contributes significantly to the success of deep learning in the 2000s and 2010s, once computing power has advanced far enough to train very large networks.
1969: Marvin Minsky and Seymour Papert publish "Perceptrons: An Introduction to Computational Geometry". In the expanded edition published in 1988, the two authors respond to claims that their 1969 conclusions significantly reduced funding for neural network research. In their account, progress had already come to a virtual halt for lack of adequate basic theories: by the mid-1960s there had been a great many experiments with perceptrons, but no one could explain why they recognized certain kinds of patterns and not others.

1970: The first anthropomorphic robot, WABOT-1, is built at Waseda University in Japan. It includes a limb-control system, a vision system, and a conversation system.

(Image: WABOT-1.)

1972: MYCIN, an early expert system that identifies the bacteria causing severe infections and recommends antibiotics, is developed at Stanford University.

1973: James Lighthill reports to the British Science Research Council on the state of AI research. He concludes that "in no part of the field have the discoveries made so far produced the major impact that was then promised." As a result, government support for AI research declines sharply.

1976: The computer scientist Raj Reddy publishes the paper "Speech Recognition by Machine: A Review", which summarizes the early work on natural language processing.

1978: Carnegie Mellon University develops XCON, a rule-based expert system that assists in ordering DEC's VAX computers by automatically selecting components based on customer requirements.

1979: The Stanford Cart crosses a room full of chairs without human intervention in about five hours, making it one of the earliest examples of a driverless vehicle.

1980: Waseda University in Japan builds Wabot-2, a humanoid musician robot that can communicate with people, read musical scores, and play tunes of average difficulty on a keyboard.
1981: The Japanese Ministry of International Trade and Industry budgets $850 million for the "Fifth Generation Computer" project. The project aims to develop computers that can carry on conversations, translate languages, interpret pictures, and reason like humans.

1984: The film "Electric Dreams" is released. It tells the story of a love triangle between a man, a woman, and a computer.

1984: At the annual AAAI meeting, Roger Schank and Marvin Minsky warn that an "AI winter" is coming: the AI bubble will soon burst, and AI investment and research funding will decline, just as they did in the 1970s.

1986: Under the direction of Ernst Dickmanns, Bundeswehr University in Munich builds the first driverless car, a Mercedes van equipped with cameras and sensors. It drives at up to 55 miles per hour on empty streets.

1987: Apple's then-CEO John Sculley gives a keynote speech at Educom on the concept of the "Knowledge Navigator", which describes an enticing future in which smart agents, working over networks connected to vast amounts of digitized information, give people access to knowledge applications.

1988: Judea Pearl publishes "Probabilistic Reasoning in Intelligent Systems". In 2011, Pearl receives the Turing Award. The citation credits him with creating the representational and computational foundation for processing information under uncertainty, and with inventing Bayesian networks, a mathematical formalism for defining complex probability models, as well as the principal algorithms used for inference in these models.

1988: Rollo Carpenter develops the chatbot Jabberwacky, which simulates human conversation in an interesting, entertaining, and humorous manner. It is an early attempt to create AI through interaction with people.

1988: Researchers at the IBM T.J. Watson Research Center publish "A statistical approach to language translation", which marks the shift from rule-based to probabilistic methods of machine translation. It also reflects a broader shift to "machine learning", which is based on statistical analysis of known examples rather than on an understanding of the task at hand. IBM's project, named Candide, translates successfully between English and French. The system is based on 2.2 million pairs of sentences.

1988: Marvin Minsky and Seymour Papert publish an expanded edition of their 1969 book "Perceptrons". Explaining the reasons for the new edition, they write that one reason progress in the field has been so slow is that researchers unfamiliar with its history keep making mistakes that others have already made.

1989: Yann LeCun and other researchers at AT&T Bell Labs successfully apply the backpropagation algorithm to a multi-layer neural network that recognizes handwritten ZIP codes. Because of the hardware limitations of the time, training the network takes about three days.

1990: Rodney Brooks publishes "Elephants Don't Play Chess", proposing a new approach to AI: building intelligent systems, specifically robots, from the ground up on the basis of ongoing physical interaction with the environment. Brooks writes: "The world is its own best model… The trick is to sense it appropriately and often enough."

1993: Vernor Vinge publishes "The Coming Technological Singularity". He predicts that within 30 years we will have the technological means to create superhuman intelligence, and that shortly afterward the human era will end.

1995: Richard Wallace develops the chatbot ALICE (short for Artificial Linguistic Internet Computer Entity), which is inspired by ELIZA. The emergence of the Web gives Wallace natural language sample data on an unprecedented scale.

1997: Sepp Hochreiter and Jürgen Schmidhuber propose Long Short-Term Memory (LSTM), a type of recurrent neural network used today in handwriting recognition and speech recognition.
1997: Deep Blue, developed by IBM, defeats the reigning world chess champion, Garry Kasparov.

(Image: chess champion Kasparov, defeated by Deep Blue.)

1998: Dave Hampton and Caleb Chung create Furby, the first domestic, or pet, robot.

1998: Yann LeCun and co-authors publish papers on applying neural networks to handwriting recognition and on optimizing backpropagation.

2000: The MIT researcher Cynthia Breazeal develops Kismet, a robot that can recognize and simulate emotions.

2000: Honda unveils ASIMO, an artificially intelligent humanoid robot that can walk as fast as a human and deliver trays to customers in a restaurant.

2001: Steven Spielberg's film "A.I. Artificial Intelligence" is released. It features a childlike android uniquely programmed with the ability to love.

2004: The first DARPA Grand Challenge, a competition for autonomous vehicles, is held in the Mojave Desert. None of the self-driving cars completes the 150-mile route.

2006: Oren Etzioni, Michele Banko, and Michael Cafarella coin the term "machine reading", meaning that a system can autonomously understand text without human supervision.

2007: Geoffrey Hinton publishes "Learning Multiple Layers of Representation". He summarizes the ideas behind multi-layer neural networks that contain top-down connections and are trained to generate sensory data rather than to classify it. These ideas lead toward the new approaches to deep learning.

2007: Fei-Fei Li and colleagues at Princeton University begin assembling ImageNet, a large database of annotated images designed to aid research on visual object recognition software.

2009: Rajat Raina, Anand Madhavan, and Andrew Ng publish the paper "Large-scale Deep Unsupervised Learning using Graphics Processors", arguing that "modern graphics processors far surpass the computational capabilities of multicore CPUs, and have the potential to revolutionize the applicability of deep unsupervised learning methods."

2009: Google begins developing a driverless car in secret. In 2014, it passes a state self-driving test in Nevada.

2009: Researchers at the Intelligent Information Laboratory at Northwestern University develop Stats Monkey, a program that writes sports news stories automatically, without human intervention.

2010: The ImageNet Large Scale Visual Recognition Challenge (ILSVRC) is launched.

2011: A convolutional neural network wins the German Traffic Sign Recognition competition with a recognition rate of 99.46%, compared with 99.22% for humans.

2011: Watson, a natural language question-answering computer developed by IBM, defeats two former champions on "Jeopardy!".

2011: Researchers at the Dalle Molle Institute for Artificial Intelligence in Switzerland report an error rate of only 0.27% in handwriting recognition using convolutional neural networks, an improvement over the 0.35%-0.40% error rate of previous years.

June 2012: Jeff Dean and Andrew Ng report on an experiment in which they showed a large neural network 10 million unlabeled images randomly selected from YouTube videos and found that one of its artificial neurons had become particularly sensitive to pictures of cats.

October 2012: A convolutional neural network designed by researchers at the University of Toronto achieves an error rate of only 16% in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), a significant improvement over the 25% error rate of the previous year.

March 2016: AlphaGo, developed by Google DeepMind, defeats the Go champion Lee Sedol.

March 2017: At the Cloud Next conference in San Francisco, Google announces Video Intelligence, a new machine learning API that automatically recognizes objects in videos and makes them searchable, so that developers can build this capability into their applications.
