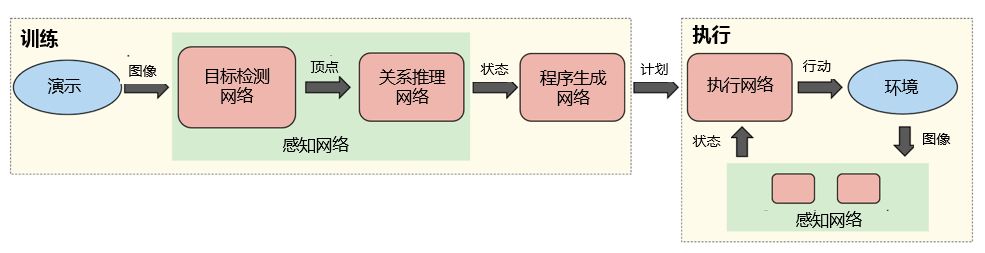

Recently, an NVIDIA research team pioneered a deep-learning-based system that teaches robots to perform tasks simply by observing human behavior. The approach aims to improve communication between humans and robots and to advance research on humans and robots working together seamlessly.

In their paper, the researchers stated: "In order for robots to perform useful tasks in real-world settings, it must be easy to communicate the task to the robot; this includes both the desired end result and any hints as to the best means to achieve that result. With demonstrations, a user can communicate a task to the robot and provide clues as to how to best perform the task."

Using NVIDIA TITAN X GPUs, the researchers trained a series of neural networks that perform perception, program generation, and program execution. The results show that the robot can learn a task from a single demonstration in the real world.

The method works as follows. A camera acquires a real-time video stream of the scene, and a pair of neural networks infers the positions and relationships of the objects in the scene in real time. The resulting percepts are passed to another network, which generates a plan describing how to recreate them. Finally, an execution network reads the plan and generates actions for the robot, taking the current state of the scene into account so that execution is robust to external disturbances.

After the robot has seen the task, it generates a human-readable description of the steps needed to re-execute it. This description lets the user quickly identify and correct any problems with the robot's interpretation of the human demonstration before the robot executes the task. The key to this capability is making full use of synthetic data to train the neural networks.
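
The plan-generation and execution steps described above can be illustrated with a small sketch. This is not the researchers' implementation, which uses trained neural networks for each stage. It assumes the perception stage has already reduced the demonstration to "block A rests on block B" relations, and the names `On`, `generate_plan`, `describe`, and `next_action` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class On:
    """Hypothetical relation inferred from the demonstration: `top` rests on `bottom`."""
    top: str
    bottom: str


def generate_plan(relations):
    """Order the stacking steps so that every supporting block is in place first."""
    remaining = list(relations)
    tops = {r.top for r in remaining}
    # Blocks that never sit on another block are assumed to be on the table already.
    placed = {r.bottom for r in remaining if r.bottom not in tops}
    plan = []
    while remaining:
        ready = [r for r in remaining if r.bottom in placed]
        if not ready:
            raise ValueError("relations cannot be ordered (cycle detected)")
        for r in ready:
            plan.append(r)
            placed.add(r.top)
            remaining.remove(r)
    return plan


def describe(plan):
    """Render the plan as human-readable steps that a user can inspect and correct."""
    return [f"Place {r.top} on {r.bottom}" for r in plan]


def next_action(plan, observed):
    """Return the first step not yet satisfied in the observed scene, or None when done.

    Re-evaluating this after every observation is what lets execution recover
    when a block is knocked off or moved by an external disturbance.
    """
    for step in plan:
        if step not in observed:
            return step
    return None


demo = [On("green", "red"), On("red", "blue")]
plan = generate_plan(demo)
for line in describe(plan):
    print(line)
```

Because `next_action` looks only at the plan and the currently observed relations, an undone step is simply selected again on the next observation, which mirrors the closed-loop behavior described in the article.
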
Existing approaches to training neural networks require large amounts of labeled training data, which is a bottleneck for these systems. With synthetic data generation, an almost unlimited amount of labeled training data can be produced easily. This is also the first time an image-centric domain randomization approach has been used on a robot. Domain randomization generates synthetic data with a large amount of diversity, so that the perception network treats the real-world data it sees as just another variation of its training data. The researchers chose to process the data in an image-centric manner so that the networks do not depend on the camera or the environment.

The researchers said: "The perception network as described applies to any rigid real-world object that can be reasonably approximated by its 3D bounding cuboid. Despite never observing a real image during training, the perception network reliably detects the bounding cuboids of objects in real images, even under severe occlusions."

In their demonstration, the team trained the object detectors on several colored blocks and a toy car. The system learns the physical relationships between blocks, such as whether they are stacked on top of one another or placed next to each other. In the demonstration video, a human operator shows the robot a set of cubes. The system then infers the corresponding program and places the cubes in the correct order. Because it takes the current state of the scene into account during execution, the system can recover from mistakes in real time.

This week, the researchers will present their paper and results at the International Conference on Robotics and Automation (ICRA) in Brisbane, Australia. The team said it will continue to explore the use of synthetic training data for robot control and to study how the method can be applied to more scenarios.
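
The domain randomization idea described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not the team's data generator. It draws a flat 2D square instead of rendering 3D cuboids, and the function names (`random_color`, `synth_sample`) and parameter ranges are hypothetical. Each sample randomizes the background color, block color, block size and position, lighting, and pixel noise. The label is expressed in image pixel coordinates, in the spirit of the image-centric formulation.

```python
import random


def random_color(rng):
    """Pick a random RGB color."""
    return tuple(rng.randrange(256) for _ in range(3))


def synth_sample(rng, size=32):
    """Return (image, label) for one randomized synthetic scene.

    image: size x size grid of RGB tuples.
    label: (x0, y0, x1, y1) box of the block in pixel coordinates, x1/y1 exclusive.
    """
    background = random_color(rng)
    block = random_color(rng)
    side = rng.randint(4, size // 2)   # random block size
    x0 = rng.randint(0, size - side)   # random block position
    y0 = rng.randint(0, size - side)
    light = rng.uniform(0.5, 1.5)      # random global lighting scale

    def shade(color):
        # Apply lighting and per-pixel Gaussian noise, clamped to the valid range.
        return tuple(
            min(255, max(0, int(v * light + rng.gauss(0, 5)))) for v in color
        )

    image = [
        [
            shade(block if x0 <= x < x0 + side and y0 <= y < y0 + side else background)
            for x in range(size)
        ]
        for y in range(size)
    ]
    return image, (x0, y0, x0 + side, y0 + side)


rng = random.Random(0)
dataset = [synth_sample(rng) for _ in range(100)]  # labels come for free
```

Since every label is produced by the generator itself, the dataset can be made as large as needed without manual annotation, which is the property the article highlights.
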