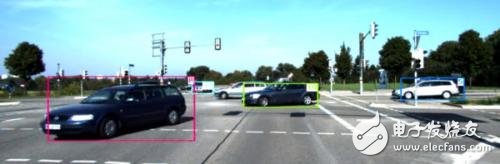

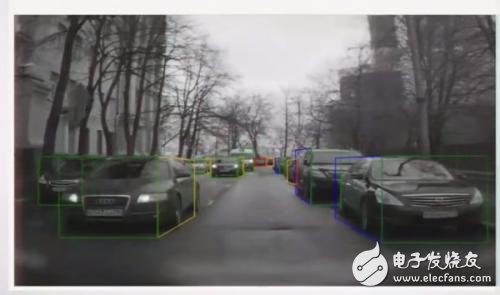

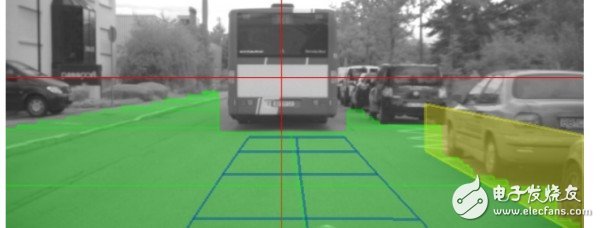

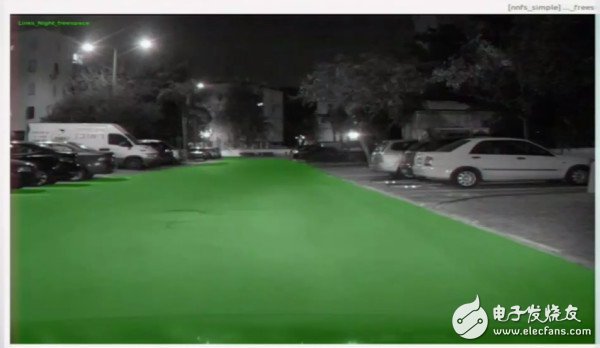

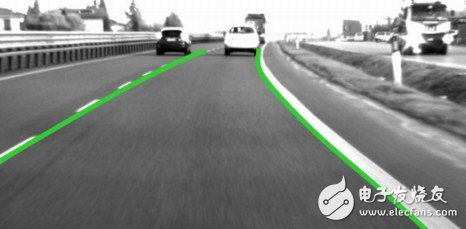

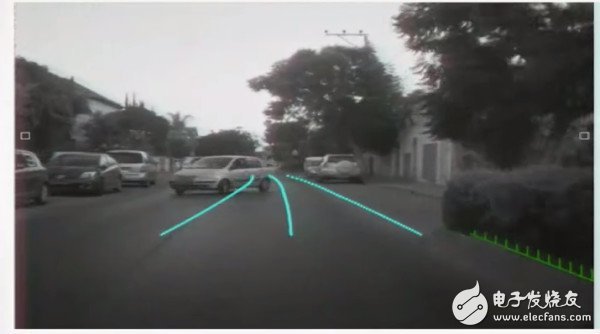

In autonomous driving, deep learning is mainly applied to image processing, that is, to camera data. It can also be used to process radar data, but images carry extremely rich information and are very hard to model by hand, and that is where deep learning has the biggest advantage. Let's look at how Mobileye, the Israeli company that leads the global automotive camera field, uses deep learning in its products.

Deep learning can be used for perception, to identify the surrounding environment and the various kinds of information useful to the vehicle. It can also be used for decision making: AlphaGo's policy network, for example, is a DNN trained to choose a move directly from the current state. For environment perception, Mobileye divides the work into three parts: object recognition, free space detection, and driving path detection.

Object recognition

A typical object detector outputs an upright rectangle showing where a car is. That is already quite good, but Mobileye's output goes further: it precisely marks the front (or rear) face and the side of each car, and it distinguishes the car's left side from its right side (shown in yellow and blue). The two kinds of result carry very different amounts of information. A plain rectangle only tells us that there is probably a car somewhere in that region; the car's exact position and orientation are completely missing. Mobileye's result, by contrast, lets us estimate the car's position and direction of travel fairly accurately, much as a human driver would after glancing at it.
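Mobileye has not published how its network produces these oriented outputs, so the following is only a sketch of the kind of information such a result carries compared with a plain rectangle. It assumes the detected front and side faces have already been mapped onto the ground plane (a step not shown here and not described in the source) as a hypothetical bird's-eye-view box in the ego car's frame: centre `x`, `y`, `length`, `width`, and heading `yaw`. The class `OrientedBox` and its fields are illustrative names, not Mobileye's representation. The point it demonstrates: two cars heading in different directions can collapse to the same upright rectangle, which is exactly the information an ordinary detector throws away.

```python
import math
from dataclasses import dataclass


@dataclass
class OrientedBox:
    """Hypothetical bird's-eye-view vehicle box in the ego frame.

    Units are metres and radians; x points forward, y points left,
    and a positive yaw means the vehicle is heading to the left.
    """
    x: float       # centre, forward distance from the ego car
    y: float       # centre, lateral offset from the ego car
    length: float  # extent along the vehicle's heading
    width: float   # extent across the vehicle's heading
    yaw: float     # heading relative to the ego car's forward axis

    def heading(self) -> tuple[float, float]:
        """Unit vector of the vehicle's direction of travel."""
        return (math.cos(self.yaw), math.sin(self.yaw))

    def corners(self) -> list[tuple[float, float]]:
        """Corners in order: front-left, front-right, rear-right, rear-left."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        hl, hw = self.length / 2, self.width / 2
        local = [(hl, hw), (hl, -hw), (-hl, -hw), (-hl, hw)]
        # Rotate each corner by yaw, then translate it to the box centre.
        return [(self.x + c * u - s * v, self.y + s * u + c * v) for u, v in local]

    def axis_aligned(self) -> tuple[float, float, float, float]:
        """(min_x, min_y, max_x, max_y): all an upright box keeps; yaw is lost."""
        xs, ys = zip(*self.corners())
        return (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    # Two cars at the same spot, one heading 30 degrees left, one 30 degrees right.
    left = OrientedBox(x=20.0, y=3.0, length=4.5, width=1.8, yaw=math.radians(30))
    right = OrientedBox(x=20.0, y=3.0, length=4.5, width=1.8, yaw=math.radians(-30))
    # Different directions of travel...
    print("heading left: ", left.heading())
    print("heading right:", right.heading())
    # ...but (up to rounding) the same upright rectangle.
    print("upright box left: ", left.axis_aligned())
    print("upright box right:", right.axis_aligned())
```

Running it shows two different heading vectors but matching upright rectangles, which mirrors the contrast between the two kinds of detection result described above.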
For nearby vehicles, other geometry-based methods combined with tracking over a few more frames might achieve comparable results. But look at the car in the far distance: its result is also completely correct, and only deep learning makes that possible. Unfortunately, Mobileye's founder and CTO usually only shows how good the technology is. He has shared some techniques in the past, but when asked how the neural network for vehicle detection is designed so that it outputs a bounding box with orientation, he just smiles and says there are a lot of tricks inside... If anyone knows of similar work in academia, please let me know by private message.

Free space detection

Before deep learning, there were two main approaches to detecting the drivable area. One is based on stereo vision from a binocular camera, or on structure from motion; the other is based on local features and image segmentation, for example with Markov random fields. A typical result: the green region is the detected drivable area, and it looks good, right? But note that on the left the green region also covers the curb and the sidewalk. Because the curb is only about ten centimeters higher than the road surface, it is hard to separate from the road with stereo vision. Traditional image segmentation struggles too, because locally the curb and the road surface are almost identical in color. Telling the two apart requires understanding the context of the whole scene. This problem was finally overcome once deep learning made scene understanding possible. In Mobileye's result the green region is again the drivable area. Here the shoulder on the right is almost level with the road surface and has exactly the same color and texture, so stereo methods cannot separate it at all.
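One common way to turn a per-pixel segmentation into a free-space result is to scan each image column from the bottom of the image upward and stop at the first pixel that is not drivable. Recording the class of that pixel also gives each boundary point a type, such as curb or guard rail. The sketch below assumes the network's output is a NumPy array of integer labels with made-up class ids (`FREE`, `CURB`, `GUARD_RAIL`, `VEHICLE`); the function name and label scheme are hypothetical, and this is not Mobileye's published method.

```python
import numpy as np

# Hypothetical label ids for a per-pixel segmentation output.
FREE, CURB, GUARD_RAIL, VEHICLE = 0, 1, 2, 3


def free_space_boundary(labels: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Scan every column upward from the bottom row until a non-drivable pixel.

    Returns (rows, kinds):
      rows[c]  is the topmost free row still connected to the bottom of
               column c (labels.shape[0] if the bottom pixel is blocked);
      kinds[c] is the label of the blocking pixel, or -1 if the column is
               free all the way to the top of the image.
    """
    h, w = labels.shape
    rows = np.full(w, h, dtype=int)
    kinds = np.full(w, -1, dtype=int)
    for c in range(w):
        r = h - 1
        # Walk up through drivable pixels.
        while r >= 0 and labels[r, c] == FREE:
            r -= 1
        rows[c] = r + 1  # first free row just below the obstacle
        if r >= 0:
            kinds[c] = labels[r, c]  # what kind of boundary stopped the scan
    return rows, kinds


if __name__ == "__main__":
    mask = np.zeros((5, 3), dtype=int)  # a tiny 5x3 "image", all road
    mask[1, 0] = GUARD_RAIL
    mask[3, 1] = CURB
    print(free_space_boundary(mask))
```

Scanning from the bottom assumes the ego car sits at the bottom of the image, so only road connected to the car counts as free space; a distant road patch behind an obstacle is not reported as drivable.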
Moreover, the network not only detects the boundary of the drivable area accurately, it also tells you what kind of boundary it is: red marks a boundary between an object and the road, the label at the mouse cursor reads "Guard rail", and in the previous image the label would be "Flat". This way, under normal circumstances you know which areas are drivable, and in an emergency you also know where the car could still go. Compared with object recognition, the principle behind this part is relatively clear: it is based on deep-learning scene understanding. Academia also has quite good results here, for example the Cambridge work in the figure below, which separates the road from the curb (blue and purple) very well.

Driving path detection

This part addresses how to drive when there are no lane markings, or when the markings are in very poor condition. If every road looked like this, with clear markings, that would of course be perfect, but road conditions or weather sometimes make the lane lines hard to detect. Deep learning offers a solution: train a neural network on data from people driving on roads without lane lines, and after training the network can judge where the car should go when no lane lines are visible. The principle is again fairly clear: have a person drive, record the camera video of the entire drive, and record the vehicle's trajectory. Each frame is used as the input, and the vehicle's path over a short period of time after that frame is used as the output to train the network. Comma.ai, which attracted a lot of attention a while ago and was founded by George Hotz, the hacker known for jailbreaking the iPhone, follows this idea; LeCun criticized it for its reliability and originality. The results are as follows.
As the results show, the driving path predicted by the neural network basically matches human judgment, even in more extreme situations (green is the predicted driving path). Without deep learning, scenes like these could not be handled at all. Of course, as I mentioned in another recent answer, path planning cannot rely entirely on a neural network. Mobileye also combines traditional lane-line detection, the guardrails and other boundaries found by the scene segmentation described above, the output of this path network, and so on, fusing all of this information to finally obtain a stable and reliable driving path.
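The data preparation for driving path detection described earlier (each frame as the input, the vehicle's path over a short time afterwards as the target) can be sketched as below. It assumes the recorded trajectory is available as one world-frame pose `(x, y, yaw)` per video frame, for example from odometry or GPS, and it expresses each future position in the coordinate frame of the car at the input frame (x forward, y left), so the target does not depend on where the drive started. `make_training_pairs`, `to_vehicle_frame` and `horizon` are hypothetical names; the source does not specify the horizon, the network, or how Mobileye or comma.ai format their targets.

```python
import math
from typing import Sequence

# World x, world y (metres), yaw (radians); a hypothetical pose format.
Pose = tuple[float, float, float]


def to_vehicle_frame(origin: Pose, point: tuple[float, float]) -> tuple[float, float]:
    """Express a world-frame point in the vehicle frame at `origin`
    (x forward, y left), i.e. rotate the offset by -yaw."""
    ox, oy, yaw = origin
    dx, dy = point[0] - ox, point[1] - oy
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * dx + s * dy, -s * dx + c * dy)


def make_training_pairs(frames: Sequence[object], poses: Sequence[Pose],
                        horizon: int) -> list[tuple[object, list[tuple[float, float]]]]:
    """Pair each frame with the next `horizon` vehicle positions, expressed in
    that frame's own vehicle coordinates. Frames near the end of the drive,
    which lack a full future path, are skipped."""
    if len(frames) != len(poses):
        raise ValueError("need exactly one pose per frame")
    pairs = []
    for i in range(len(frames) - horizon):
        future = [to_vehicle_frame(poses[i], poses[j][:2])
                  for j in range(i + 1, i + 1 + horizon)]
        pairs.append((frames[i], future))
    return pairs


if __name__ == "__main__":
    # A straight drive along the world y axis, one metre per frame.
    poses = [(0.0, float(k), math.pi / 2) for k in range(5)]
    frames = [f"frame_{k}" for k in range(5)]  # stand-ins for camera images
    for frame, path in make_training_pairs(frames, poses, horizon=2):
        print(frame, path)
```

The `horizon` controls how "short" the predicted path is: if every frame of a 30 fps video is used, `horizon=30` would cover one second of future driving.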